I collaborated with a small team under tight time constraints to enhance the documents module of a SaaS platform.

We improved task success rates and provided recommendations for incorporating Artificial Intelligence.

COMPANY OVERVIEW

Our client was BoardSpace, a SaaS platform built for volunteer boards of directors.

BoardSpace’s clients are primarily homeowners’ associations, condo associations, and non-profit organizations.

The platform helps boards organize their documents, structure meetings, and manage action items. We were focused on the documents module.

PROJECT SUMMARY

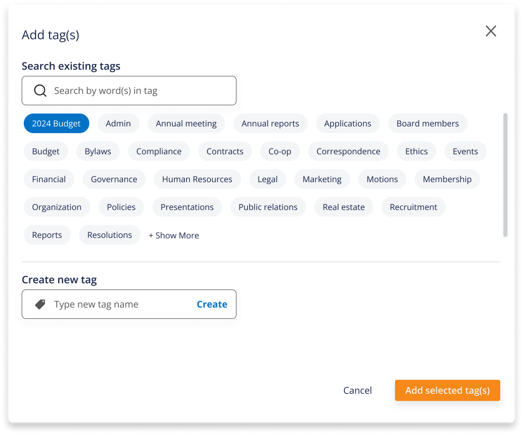

A core feature of BoardSpace’s documents module is the ability to categorize documents using one or more labels, or tags.

Our client had 3 primary goals.

1. Improve the user flow for uploading and tagging documents.

Why? Only 38% of participants were able to successfully upload and tag a document during previous usability testing.

2. Implement auto-tagging using Artificial Intelligence.

Why? Tagging functionality was significantly under-utilized or poorly utilized.

3. Modernize the visual appearance of the documents module.

Why? The CEO was “sick of looking at it” – which meant users probably were, too!

ROLES & RESPONSIBILITIES

I collaborated remotely with 2 other UX Designers on all aspects of the project, and we each specialized in an area.

TIMELINE & SCOPE

To achieve everything within our 4-week timeline, we split the project into two phases: without auto-tagging and with auto-tagging.

This corresponded with the client’s plan to implement the changes to the documents module in 2 stages – and allowed us to conduct the first round of usability testing before our research was complete.

PRODUCT REVIEW & GAP ANALYSIS

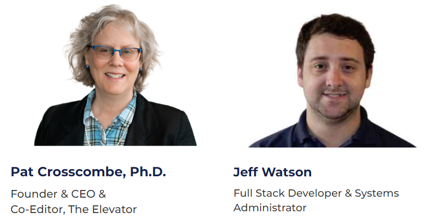

First, we had a kick-off meeting with the client to establish their goals.

The CEO and Lead Developer walked us through:

The user flow of the documents module.

The working prototype from the prior team, and some areas where they wanted modifications.

RESEARCH

We conducted research to learn about auto-tagging, what people did and didn’t want from it, and how other companies had implemented similar functionality using AI.

We took a 3-pronged approach to gathering information about the best way to implement this technology.

Secondary research

Interviews

Competitive analysis

SECONDARY RESEARCH

We familiarized ourselves with the basics of AI-based tagging.

Benefits of AI-based tagging:

Efficiency

Consistency

Searchability

Options for implementation:

Use a pre-existing model as is.

Provide training data and a standardized set of tags.

INTERVIEWS

We talked to target users, as well as experts in the field of AI.

What we learned…

…from target users:

Auto-tagging should be optional.

Users want to be able to undo auto-tagging and manually add more tags.

…from AI experts:

Instruct the model to use the file name in addition to file content when analyzing.

Run models locally to prevent confidential information from being incorporated into a model’s training data.

COMPETITIVE ANALYSIS

We studied similar platforms to see how they handled document organization and AI-based support tools.

A couple of new things we discovered:

Auto-tagging has more commonly been applied to images.

Set a maximum number of tags because the model may apply too many tags to a single document.
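As a rough illustration of how two of these research findings (a standardized set of tags and a maximum number of tags per document) might be applied on the back end, here is a minimal sketch. It is not BoardSpace's implementation: the tag list, the cap of 3, the `select_tags` function, and the idea that the model returns (tag, score) pairs are all assumptions made for the example.

```python
from typing import Iterable

# Hypothetical values: neither the tag list nor the cap comes from BoardSpace.
ALLOWED_TAGS = {"budget", "minutes", "bylaws", "insurance", "contracts"}
MAX_TAGS = 3


def select_tags(
    suggestions: Iterable[tuple[str, float]],
    allowed: set[str] = ALLOWED_TAGS,
    max_tags: int = MAX_TAGS,
) -> list[str]:
    """Keep the highest-scoring suggestions that belong to the standardized set."""
    best: dict[str, float] = {}
    for tag, score in suggestions:
        tag = tag.strip().lower()  # normalize so "Minutes" and "minutes" match
        if tag in allowed and score > best.get(tag, float("-inf")):
            best[tag] = score  # keep only the best score for a repeated tag
    # Sort by score (highest first), then alphabetically to break ties.
    ranked = sorted(best, key=lambda t: (-best[t], t))
    return ranked[:max_tags]


# Assumed model output: (tag, confidence score) pairs for one document.
suggested = [
    ("Minutes", 0.91), ("budget", 0.84), ("parking", 0.80),
    ("bylaws", 0.42), ("insurance", 0.40), ("minutes", 0.55),
]
print(select_tags(suggested))  # ['minutes', 'budget', 'bylaws']
```

Because the cap is applied after filtering, a tag outside the standardized set (here, "parking") never uses up one of the limited slots.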

USABILITY TESTING

I conducted 2 rounds of usability testing: one before incorporating auto-tagging and one after adding the new functionality.

The client provided a user persona, and we selected test participants who matched at least two of the following criteria:

Have served on a board of directors

40 to 80 years of age

Limited computer proficiency

“When you’re on a board, everything is meeting-driven.”

“Tools with more functionality than the board needs just overcomplicate things.”

“This is super comprehensive. I particularly like the tags and linked meetings.”

“Auto-tagging would be a time-saver.”

ITERATION: WHAT & WHY

Based on everything we learned from the client, our research, and usability testing, we made changes to the prototype.

The changes pertained to 4 main areas:

Main documents screen

Document search

Error handling during document upload

Document tagging and AI implementation

Main Documents Screen

1 & 2 The client didn’t think the “Recent Documents” section or the “Uploaded by” column delivered value. We removed both, and added a sortable “Date Uploaded” column instead. The person who uploaded the document would still be viewable through the kebab menu.

3 There were 7 icons in each document row! Less frequently used icons were moved into the kebab menu.

Document Search

4 Some test participants were confused by what could be entered in the search bar. We clarified the wording.

5 Many participants didn’t see the filter icon on the far right of the search bar, or didn’t know what it meant. We added a label for clarification. This also enlarged the area, making it more noticeable.

6 Participants were confused by the date search. We split it into two options: searching by meeting date, or by the range of dates in which the document was uploaded.

Error Handling During Document Upload

7 Users were being prompted to “resize” documents over 20 MB, and most test participants didn’t know how to do that. We added a link to instructions for compressing files.

8 Participants tried clicking on the red “Rename document” message when a duplicate file name was flagged. We made it a click target that opened the same modal as the pencil icon. We also clarified the modal text and gave the user the option of removing the file.

Document Tagging & AI Implementation

9 Many test participants didn’t notice the option to select all documents. We moved it directly above the file list, enlarged it, and changed it to a contrasting color.

10 The heavy lifting of the AI-powered auto-tagging would be done on the back end. Users would only see a simple auto-tag button. Based on feedback from user testing, we clarified the wording and enlarged the button for better visibility.

Below is a demo of the final user flow for uploading and auto-tagging documents.

BoardSpace Demo

KEY PERFORMANCE INDICATORS

A prior team had conducted usability testing on the live BoardSpace platform. I compared task success rates from the second round of usability testing to the results from the live site.

Our modifications to the search-by-tag functionality missed the mark, and I had some ideas for improvement.

The other changes all improved task success rates.

CLIENT HAND-OFF

At the conclusion of the project, we presented our final designs to the CEO and Lead Developer.

Client Rating: 5/5

"It was fantastic working with Anne, Gisal, and Joe. They worked under tight timelines and showed tremendous dedication to exceeding expectations in our project's achievement. They demonstrated a strong work ethic in completing the project and displayed tremendous creativity and problem-solving skills in producing solid recommendations. I can’t wait to implement their designs."

-Pat Crosscombe, Founder & CEO of BoardSpace

OUTCOME

Revisiting our client’s primary goals, we declared the project a success!

1. Improve the user flow for uploading and tagging documents.

Achieved.

Document upload success rate increased from 38% to 80%.

2. Implement auto-tagging using Artificial Intelligence.

Achieved.

Designs included carefully integrated AI functionality, along with business recommendations.

3. Modernize the visual appearance of the documents module.

Deferred.

The client wasn’t ready to update all modules, and they needed to be consistent.

NEXT STEPS

We also provided the client with our recommendations for continuing the project.

Implementing AI-powered auto-tagging:

Provide clear information to increase adoption of auto-tagging.

Address security concerns proactively.

Accessibility considerations:

Buttons throughout the platform used white text on BoardSpace’s orange brand color, a combination that doesn’t meet WCAG AA contrast guidelines.

Use large text whenever possible – the average BoardSpace user is older than the general population, and usability test participants responded positively to it.
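For readers who want to check a color pairing themselves, the sketch below computes the WCAG 2.x contrast ratio between two colors. WCAG AA asks for at least 4.5:1 for normal text and 3:1 for large text. The orange used here (`#FF8C00`) is only a stand-in, since the actual BoardSpace brand hex value isn't given in this case study, and the function names are my own.

```python
def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio: (lighter + 0.05) / (darker + 0.05)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


AA_NORMAL_TEXT = 4.5  # minimum ratio for normal-size text
AA_LARGE_TEXT = 3.0   # minimum ratio for large text

# Placeholder orange; substitute the real brand color to check it.
ratio = contrast_ratio("#FFFFFF", "#FF8C00")
print(f"Contrast ratio: {ratio:.2f}:1")
print("Passes AA for normal text:", ratio >= AA_NORMAL_TEXT)
print("Passes AA for large text:", ratio >= AA_LARGE_TEXT)
```

A check like this also shows how the two accessibility recommendations interact: large text has a lower threshold, but a pairing that falls below 3:1 fails at any size.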

LESSONS LEARNED

If I had a do-over, there are a few things I would approach differently.

I should have viewed my client as another user type because they operate the platform’s back end.

With future short-term projects, I’ll make sure the client has everything I need – in the format I need – prior to getting started.

I’ll always be prepared for the client to change their mind, even late in the process.
